My July 1 Substack demonstrated how AI can easily write a credible newspaper editorial on a complex subject. The editorial was well written with a logical flow and a strong conclusive ending. Nearly 60 percent of subscribers taking a related poll believed the editorial was fair and balanced. The others were closely divided on the editorial having either a conservative or liberal bias. Many journalists would be proud to have written the editorial.

How does AI do such a complex, and seemingly human, task?

There are two parts to the answer: (1) the Artificial Intelligence program whose step-by-step instructions create intelligent behavior, and (2) the computer system which executes the AI program.

Today, I’m writing about the computer system. Next week, unless current events intervene, I’ll discuss how AI programs work.

At their most basic level, computers are remarkably simple. Unlike, for example, a radio which consists of many different component types—capacitors, resistors, inductors, transistors—even the most complex computer can be constructed from one simple component type.

In 1854, the English mathematician George Boole published “An Investigation of the Laws of Thought,” which introduced a mathematical framework for logical reasoning. Boole showed that all logic can be built from three simple functions: AND (true only when all the logical values being considered are true), OR (true when at least one logical value is true), and NOT (flip a logical value from true to false, or vice versa).

George Boole’s proof that logic, long considered a branch of philosophy, was mathematical in nature was widely acclaimed. Augustus De Morgan, a prominent 19th-century mathematician, praised Boole’s contribution to science:

“Boole’s system of logic is but one of many proofs of genius and patience combined. . .That the symbolic processes of algebra, invented as tools of numerical calculation, should be competent to express every act of thought, and to furnish the grammar and dictionary of an all-containing system of logic, would not have been believed until it was proved.”

But during the 19th century, there was little practical application for Boole’s theories, with one exception. Arthur Conan Doyle based Sherlock Holmes’s logical approach to solving crimes on George Boole’s binary logic, breaking reality down into simple binary decisions: true or false, possible or impossible.

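Decades later, at the dawn of the computer age, Claude Shannon showed in his 1937 master’s thesis that Boole’s logic could be implemented with electrical switching circuits. Combined with the earlier finding that the NOT and AND functions can be merged into a single universal function, this means that one simple component, a NOT AND gate (NAND gate), can be used as a universal building block. Regardless of how complex it is, any logical or arithmetic problem can be solved using a network of simple NAND gates.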

That was a profound discovery. Incredible as it seems, NAND gates alone can construct all the elements of a digital computer.
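For readers who like to see a claim run, here is a minimal Python sketch of NAND as a universal building block. The function names (`nand`, `not_`, `and_`, `or_`, `check_all`) are my own choices for illustration; the point is only that Boole's three functions can each be wired from NAND gates and nothing else.

```python
from itertools import product

def nand(a: bool, b: bool) -> bool:
    """The only primitive gate: output is false only when both inputs are true."""
    return not (a and b)

# Everything below is wired from nand() alone.
def not_(a: bool) -> bool:
    return nand(a, a)

def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))

def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))

def check_all() -> bool:
    """Compare each NAND-built gate with Python's own operators on every input."""
    for a, b in product([False, True], repeat=2):
        if and_(a, b) != (a and b) or or_(a, b) != (a or b):
            return False
    return not_(True) is False and not_(False) is True

print(check_all())  # prints True
```

With only two possible values per input, every combination can be checked exhaustively, which is why four rows are enough to confirm each gate.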

What’s a NAND gate? Think of a gate to a horse corral. Every morning the rancher opens the gate to let the horses out to graze, unless (1) the weather looks stormy, AND (2) the rancher expects to be away, unable to corral the horses if a storm develops. In that case, the rancher does NOT open the gate.

Whether or not to open the gate is a logical decision in which the corral gate acts as a NAND gate: if events A (stormy weather) AND B (away from ranch) are both true, another event (open the gate) must be inhibited.
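The rancher's decision can be written out as a four-row truth table. This is a small sketch; `open_gate` and `truth_table` are hypothetical names used only for this example.

```python
def open_gate(stormy: bool, away: bool) -> bool:
    """NAND: the gate stays shut only when both conditions are true."""
    return not (stormy and away)

def truth_table():
    """List every combination of the two conditions with the resulting decision."""
    rows = []
    for stormy in (False, True):
        for away in (False, True):
            rows.append((stormy, away, open_gate(stormy, away)))
    return rows

for stormy, away, opened in truth_table():
    print(f"stormy={stormy!s:<5} away={away!s:<5} open gate={opened}")
```

Only the last row, stormy weather with the rancher away, leaves the gate closed.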

This is how computers make decisions, billions of times a second. Even the most complex problems can be reduced to a series of simple NAND-like decisions.

Since George Boole’s logic deals with binary values—yes or no, true or false, one or zero—computers represent numbers as binary digits (known as “bits”).
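To make the link between bits and arithmetic concrete, here is a sketch of binary addition built only from NAND gates. It assumes an 8-bit width and uses names of my own (`xor`, `full_adder`, `add`); real hardware adders are organized for speed in ways this example ignores.

```python
def nand(a: int, b: int) -> int:
    """NAND on single bits (0 or 1)."""
    return 1 - (a & b)

def xor(a: int, b: int) -> int:
    """Exclusive OR wired from four NAND gates."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def full_adder(a: int, b: int, carry_in: int):
    """Add three bits; return (sum_bit, carry_out), using NAND gates only."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = nand(nand(a, b), nand(partial, carry_in))
    return sum_bit, carry_out

def add(x: int, y: int, width: int = 8) -> int:
    """Add two numbers bit by bit, lowest bit first, as a chain of full adders."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # any carry beyond `width` bits is dropped

print(format(13, "08b"))  # prints 00001101, the number 13 as eight bits
print(add(13, 29))        # prints 42
```

Because the result is limited to eight bits, a sum larger than 255 wraps around, just as a fixed-width hardware register would.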

But there’s a complication. Most problems are iterative: they are solved step by step by repeating the same task many times, as in multiplication and division. Intermediate results then need to be stored for use during the next iteration, which requires some type of memory.
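As a small illustration, multiplication can be carried out as repeated addition, with a running total held between steps. This is a sketch for non-negative whole numbers only; the function name `multiply` is mine, and real processors use faster methods.

```python
def multiply(a: int, b: int) -> int:
    """Multiply by adding `a` to a running total `b` times (b must be >= 0)."""
    total = 0  # the intermediate result that must be remembered between steps
    for _ in range(b):
        total = total + a  # each iteration reuses the previous step's result
    return total

print(multiply(6, 7))  # prints 42
```

The variable `total` plays the role of memory: without somewhere to keep it, each step would have nothing to build on.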

And guess what? A memory element to store a single bit can be constructed from two NAND gates by connecting the output of each NAND gate to the input of the other. That simple circuit, known as a flip-flop, can store one bit interpreted either as an arithmetic one or zero, or a logical true or false.
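The cross-coupled circuit can be simulated in a few lines. This sketch assumes the common arrangement in which each gate's second input is a control line that is normally 1 and is pulled to 0 to set or reset the stored bit; the names `NandLatch`, `apply`, `set_n`, and `reset_n` are my own, and driving both control lines to 0 at once is deliberately not exercised.

```python
def nand(a: int, b: int) -> int:
    """NAND on single bits (0 or 1)."""
    return 1 - (a & b)

class NandLatch:
    """Two NAND gates, each feeding its output back into the other."""

    def __init__(self):
        self.q, self.q_bar = 0, 1  # start out storing a zero

    def apply(self, set_n: int, reset_n: int) -> int:
        """Apply the control inputs and let the feedback loop settle."""
        for _ in range(4):  # a few passes are enough for the valid input cases
            q = nand(set_n, self.q_bar)
            q_bar = nand(reset_n, self.q)
            if (q, q_bar) == (self.q, self.q_bar):
                break  # outputs stopped changing: the circuit is stable
            self.q, self.q_bar = q, q_bar
        return self.q

latch = NandLatch()
print(latch.apply(set_n=0, reset_n=1))  # set: prints 1
print(latch.apply(set_n=1, reset_n=1))  # hold: still prints 1
print(latch.apply(set_n=1, reset_n=0))  # reset: prints 0
print(latch.apply(set_n=1, reset_n=1))  # hold: still prints 0
```

The two "hold" calls are the interesting ones: the inputs are identical, yet the output differs because the circuit remembers what happened before.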

Memory, though, is needed for more than storing intermediate results. Today’s complex programs and their associated data could fill an Encyclopaedia Britannica many times over. That requires massive amounts of electronic memory.

In theory, the memory holding these huge aggregations of programs and data could be constructed from flip-flops. But today’s computers store both programs and data more efficiently in capacitors, which serve as tiny electrical memory cells. Each cell of this Dynamic Random Access Memory (DRAM) consists of a capacitor and a transistor and stores a single bit of data.

These simple NAND and DRAM elements are fabricated on the microchips that make up today’s computer systems. A single microchip can hold billions of NAND and DRAM elements. Your smartphone might have a dozen or so microchips, while today’s giant supercomputers have thousands.

It’s all fantastical, especially since even the most powerful microchips are primarily constructed from silicon, one of the earth’s most common elements, refined from silica sand.

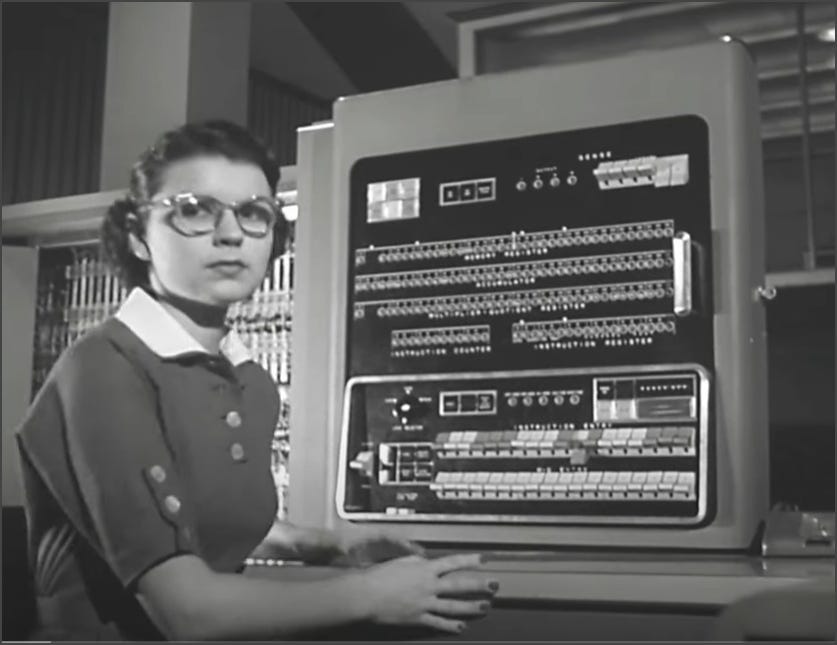

Every computer is composed of five functional units, all constructed from simple NAND and DRAM elements:

An Input Unit for entering data and programs. This might be anything from a keyboard to another computer.

A Storage Unit for storing both data and the programs operating on that data.

An Arithmetic and Logic Unit (ALU) for making calculations and logical decisions.

A Control Unit for sequencing operations across the entire system.

An Output Unit such as a computer screen or another computer.

Together, the Storage Unit, Arithmetic and Logic Unit, and Control Unit are typically referred to as the Central Processing Unit (CPU). Today, nearly all computers, even your smartphone, have multiple CPUs capable of processing programs in parallel.
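To show how the five units cooperate, here is a toy machine in Python. Everything about it is invented for illustration: the instruction names (`IN`, `LOAD`, `ADD`, `SUB`, `STORE`, `OUT`, `HALT`), the single accumulator, and the function names are assumptions of this sketch, not a description of any real processor.

```python
def alu(op: str, a: int, b: int) -> int:
    """Arithmetic and Logic Unit: performs one calculation."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    raise ValueError(f"unknown ALU operation: {op}")

def run(program, input_values):
    input_unit = iter(input_values)                    # Input Unit
    storage = {"program": list(program), "data": {}}   # Storage Unit: program and data
    output_unit = []                                   # Output Unit
    accumulator = 0                                    # working value inside the CPU
    counter = 0                                        # Control Unit: next instruction
    while True:
        op, name = storage["program"][counter]         # Control Unit fetches a step
        counter += 1
        if op == "IN":
            storage["data"][name] = next(input_unit)
        elif op == "LOAD":
            accumulator = storage["data"][name]
        elif op in ("ADD", "SUB"):
            accumulator = alu(op, accumulator, storage["data"][name])
        elif op == "STORE":
            storage["data"][name] = accumulator
        elif op == "OUT":
            output_unit.append(storage["data"][name])
        elif op == "HALT":
            return output_unit
        else:
            raise ValueError(f"unknown instruction: {op}")

# A hypothetical program: read two numbers, add them, output the sum.
PROGRAM = [
    ("IN", "x"), ("IN", "y"),
    ("LOAD", "x"), ("ADD", "y"),
    ("STORE", "sum"), ("OUT", "sum"),
    ("HALT", None),
]

print(run(PROGRAM, [2, 3]))  # prints [5]
```

Note that the program sits in the same storage as the data it operates on, matching the Storage Unit described above.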

Central Processing Units process data in discrete steps called clock cycles. These cycles are controlled by electrical pulses distributed throughout the CPU. The interval between clock pulses sets the CPU’s processing speed, much like a metronome helps a musician play in time. But a computer clock ticks as many as three billion times a second, slicing time into infinitesimally small segments. Computer clock cycles are so short that during one cycle light travels only about four inches. (During a full second, light travels about 186,000 miles.)
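The four-inch figure is easy to check. This short calculation assumes a clock of exactly three billion ticks per second and uses the defined speed of light in a vacuum; the variable names are mine.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458   # metres per second, in a vacuum
CLOCK_TICKS_PER_S = 3_000_000_000      # assumed clock rate: three billion ticks a second
METRES_PER_INCH = 0.0254

seconds_per_cycle = 1 / CLOCK_TICKS_PER_S
metres_per_cycle = SPEED_OF_LIGHT_M_PER_S * seconds_per_cycle
inches_per_cycle = metres_per_cycle / METRES_PER_INCH

print(f"One cycle lasts {seconds_per_cycle * 1e9:.3f} billionths of a second")
print(f"Light travels {inches_per_cycle:.2f} inches in that time")
```

The result comes out a little under four inches, or roughly ten centimetres.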

The complexity and speed of today’s computers would have been incomprehensible to computer engineers seventy-five years ago. Yet the computer itself is, well, dumb as a rock. Even today’s most sophisticated supercomputers would mindlessly play tic-tac-toe for the next century if so instructed. As I’ll discuss next week, it’s the computer programs, millions of simple step-by-step instructions, which create the computer’s skills and, more so every day, its growing intelligence.
